Conceptually, artificial intelligence hasn’t made progress for decades. There evidently is a roadblock: some kind of prejudice is keeping us from seeing the grand picture. What is this prejudice, what is the roadblock?

All it takes to open the road, I maintain, is giving the correct answer to a simple question: how does the neural tissue in our brain produce and represent the mental phenomena that we experience introspectively?

What is the data structure of the brain,

what is the neural code?

Before you brush away this question as long-since answered, naive, misguided or irrelevant, come along on a little ride and I will convince you that the current answers to this question are grossly insufficient and that the correct answer may be around the corner.

Bits and Neurons

There are two ruling versions of the neural code: bits and neurons – the neurons of artificial neural nets (ANNs). McCulloch & Pitts’s 1943 paper describes both neurons and bits as logical gates. The system they describe is that of a digital machine, and they prove its universality: any logical function can be implemented as a network of logic gates. Bits have the expressive power to represent anything we can imagine: they are universal. The only problem is that McCulloch and Pitts, and indeed the whole fixation on universality, totally ignore one question: where do wiring patterns – logical functions, algorithms – come from? The universality of bits is also their weakness: bits acquire their meaning from the algorithms that generate and interpret them, but the design of these algorithms or wiring patterns takes place outside the system: algorithms are designed by humans! As long as that is the case, any intelligence in the machine is nothing but a passive shadow of human thought. And even if one accepted that, creating anything like an autonomous organism in algorithmic fashion is a task beyond the economic power of mankind.
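To make the point about wiring concrete, here is a minimal sketch of threshold units in the spirit of McCulloch and Pitts. It is a simplification: the names `mcp_unit`, `AND`, `OR`, `NOT` and `XOR` are illustrative, and negative weights stand in for the inhibitory inputs of the original paper. The units themselves are trivial; the XOR function exists only because someone chose the wiring by hand.

```python
def mcp_unit(weights, threshold):
    """Build a binary threshold unit: it fires (1) when the weighted
    sum of its binary inputs reaches the threshold, else stays silent (0)."""
    def fire(*inputs):
        total = sum(w * x for w, x in zip(weights, inputs))
        return 1 if total >= threshold else 0
    return fire

# Elementary gates, each a single unit with hand-picked weights.
AND = mcp_unit([1, 1], 2)
OR = mcp_unit([1, 1], 1)
NOT = mcp_unit([-1], 0)   # assumption: a negative weight models inhibition

def XOR(a, b):
    # The wiring pattern below is designed outside the system, by a human.
    return AND(OR(a, b), NOT(AND(a, b)))

truth_table = [(a, b, XOR(a, b)) for a in (0, 1) for b in (0, 1)]
print(truth_table)
```

Nothing in the units says which of the many possible wirings to build; that knowledge sits entirely with the designer.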

The other interpretation McCulloch & Pitts offered for their logical circuits was seeing their gates as neurons, as logical propositions, propositions that are either true or false (neuron ON or OFF). Whereas the bit-representing gates or memory cells of the computer are mere way-stations for bit patterns that flow through them, neurons have meaning permanently fixed to them. This interpretation has remained the basis for the code of the neural camp until today: each neuron is an elementary symbol (for some more or less complex mental entity), and the only degree of freedom of a neuron is to be active or silent, making the mental entity present in the current state of mind or absent from it. (This interpretation cannot possibly be wrong, according to a whole industry of neurophysiological recording of single cells. But there may be something missing.)

The attraction of ANNs is their ability to structure their wiring by learning and self-organization. This creates the vision of generating an artificial thinking mind: initialize a simple structure, expose it to a complex environment and it learns to deal with it, just as our own mind has been generated on the basis of a little genetic information and some years of training. The great weakness of the ANN data structure is, however, its very limited expressive power, its inability to generate and express the mental universe we experience, as I will argue.

Preempting that, let me conclude that both AI and the attempt to understand the function of the brain are stalled because of deficient answers to the neural code question.

Bits are universal but programming them is intractable,

neurons self-organize but have too little expressive power.

The Two Camps

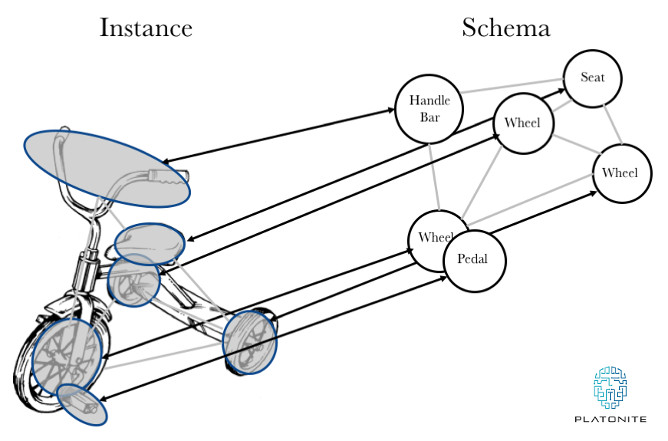

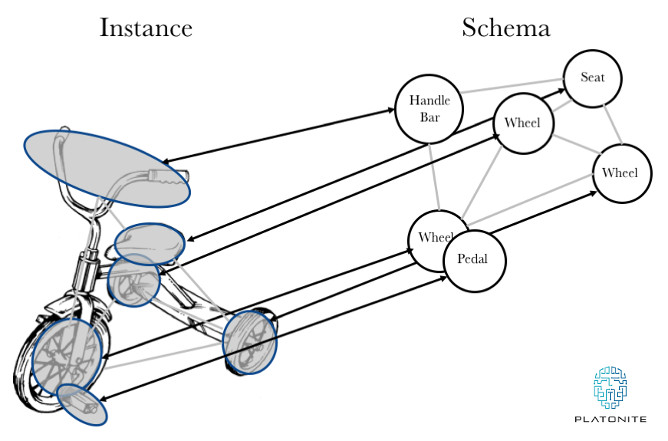

Bits and neurons each have their own camps and ardent supporters, each with convincing arguments in favor of their own version of the code and against that of the other camp. Algorithmically controlled bits form the mindset of classical AI, and that camp insists on the importance of symbol processing for the functioning mind. Examples of symbol processing are forming or parsing sentences, comprehending objects or situations or generating purposeful action sequences. The essence of this mode of comprehension or structure generation lies in the coupling of an abstract structural description, called a schema, to a concrete instance. A schema (a term often attributed to Immanuel Kant) is an arrangement of symbols, the decisive process being the coupling of general schemas to instances. We parse a sentence like “Mary gave Tom a book” by recognizing in it the syntactical schema “subject – verb – indirect object – direct object”. We then understand the sentence by constructing a representation of a more or less concrete scene by interpreting the words themselves as schemas – Mary and Tom as persons, “gave” as describing the action of transferring something from one person to another and so on – and invoking the more abstract schemas behind the syntactical constituents – “subject” as schema of an agent performing an action, etc. – and connecting all these schemas and their instantiations into a scene representation.

Schema-based Comprehension. Concrete objects are comprehended as instances of general schemas. A schema represents the common structure of a broad range of instances, with the help of “nodes” standing for object parts (wheels, seat, handlebar, pedals in the example) and “links” representing the relationships of the parts (below, right-of, behind etc., in object-internal coordinates in this case). The schema is put in relationship to the instance with the help of another type of link (black double arrows), forming a homeomorphy mapping: seat instance to seat node and so on, preserving internal link structure between object and schema. (Management of the mapping is not trivial, as the 3D spatial relations inside the schema have to be translated into the 2D relations inside the image depending on the pose.) The nodes of the schema are themselves structured sub-schemas, recognizing their referents in the instance on the basis of homeomorphy – the right features in the right arrangement.
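The mapping between schema and instance can be sketched as a small graph-matching problem. The following is only a toy illustration under stated assumptions: the bicycle parts, the region names `r1` to `r4`, the relation labels and the function `find_mappings` are all hypothetical, the 3D-to-2D pose problem is ignored, and the brute-force search over permutations is a stand-in, not a claim about how the brain finds the mapping.

```python
from itertools import permutations

# Hypothetical schema: each node expects a feature type; links carry a relation label.
schema_types = {"seat": "seat", "handlebar": "handlebar",
                "front_wheel": "wheel", "rear_wheel": "wheel"}
schema_links = {("seat", "rear_wheel"): "above",
                ("handlebar", "front_wheel"): "above",
                ("handlebar", "seat"): "in_front_of",
                ("front_wheel", "rear_wheel"): "in_front_of"}

# Hypothetical instance: image regions with detected feature types and relations.
instance_types = {"r1": "wheel", "r2": "wheel", "r3": "seat", "r4": "handlebar"}
instance_links = {("r3", "r1"): "above",
                  ("r4", "r2"): "above",
                  ("r4", "r3"): "in_front_of",
                  ("r2", "r1"): "in_front_of"}

def find_mappings(s_types, s_links, i_types, i_links):
    """Return all one-to-one maps from schema nodes to instance regions
    that match feature types and preserve every schema link."""
    s_nodes = list(s_types)
    matches = []
    for regions in permutations(i_types, len(s_nodes)):
        mapping = dict(zip(s_nodes, regions))
        types_ok = all(s_types[n] == i_types[mapping[n]] for n in s_nodes)
        links_ok = all(i_links.get((mapping[a], mapping[b])) == rel
                       for (a, b), rel in s_links.items())
        if types_ok and links_ok:
            matches.append(mapping)
    return matches

matches = find_mappings(schema_types, schema_links, instance_types, instance_links)
print(matches)
```

Feature types alone leave the two wheels ambiguous; only the link structure decides which region is the front wheel. Note also that the search visits every permutation of regions, so its cost explodes with the number of parts, which is exactly the kind of combinatorial space a generic architecture would have to avoid.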

Schema application has been one of the dominant ideas of algorithmic AI – Marvin Minsky spoke of frames, for instance, Roger Schank of scripts – and they tried them on various applications in language and vision.

There is little reason to doubt the validity of this approach. It captures a large chunk of what we call cognition, is typical of what is meant by symbolic processing, and it should set the style in which to realize AI. Yet the heroes of that period of AI in the 1970s didn’t succeed with it beyond little toy examples. One of the reasons – the slowness of computers – is quickly disappearing now, but a fundamental impediment for the approach was and is the lack of a homogeneous, generic data-and-process architecture that would be needed to describe instances and schemas as well as the relations between them.

The schema approach will fly only on the basis of a proper neural code.

Neural partisans’ main reservation against the symbolic camp is that symbols as mere tokens lack body and substance, in distinction to neural systems with their rich sets of feature-neurons. The symbolic camp, however, rightly retorts that the neural camp has no way of keeping the sets of features of different objects separate from each other (the binding problem), or even of speaking about the arrangement and relations of symbols constituting a schema or about the relations between the elements of schema and instance. It is true that models of symbolic processing tend to restrict their attention to token-symbols instead of making explicit the extended hierarchies of schemas that give them body and substance in the mind of the person contemplating the symbols on paper. The controversy recalls Immanuel Kant’s dictum “thoughts without content are empty, intuitions without concepts are blind”. What is needed is a data architecture that combines concepts with content, symbols with body and substance. The problem must have a solution, as demonstrated by the brain! The vexing situation is that it is all in front of our eyes when we look through the microscope at neural tissue – we apparently only have to interpret it the right way.
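The binding problem can be shown in a few lines. This is a deliberately crude sketch: the feature names and the two scenes are made up, and a neural state is reduced to the set of feature neurons that are active, which is the ON/OFF code described earlier. Two different scenes then produce exactly the same state.

```python
def active_neurons(scene):
    """State of an ON/OFF feature code: the set of feature neurons
    switched on by any object in the scene."""
    return frozenset(feature for obj in scene for feature in obj)

def bound_objects(scene):
    """A structured description that keeps each object's features together."""
    return frozenset(frozenset(obj) for obj in scene)

# Two hypothetical scenes that differ only in which color goes with which shape.
scene_a = [{"red", "square"}, {"blue", "circle"}]
scene_b = [{"blue", "square"}, {"red", "circle"}]

same_activity = active_neurons(scene_a) == active_neurons(scene_b)
same_bound = bound_objects(scene_a) == bound_objects(scene_b)
print(same_activity, same_bound)
```

The flat code cannot tell the scenes apart, while the description that groups features by object can. What the flat code lacks is any way to express which features belong together.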

Self-Organization

Understanding cognitive representation is one thing, creating it is another. On the one hand, our brain (indeed our whole organism) is created on the basis of a mere one gigabyte of genetic information, and all the sensory experience an infant needs in order to build a representation of its world doesn’t amount to much information either. On the other hand, our brain is very complex, containing 80 billion neurons, connected by an estimated 10¹³ or 10¹⁴ synapses. Noting down this wiring diagram would need on the order of a petabyte of information, a million times more than the gigabyte that goes into creating it. Assuming this complexity to be no superfluous luxury or random junk, we must reckon with a powerful mechanism of organization. This mechanism is not based on external agents (like in a factory producing cars or computers) but must be based on self-interaction. Beginning with an initial structure, the system goes through cycles, at each stage the existing system generating activity that acts back on its structure, modifying and differentiating it. Once the brain and its macroscopic structure have been formed this way, the process can from then on be described as network self-organization.
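As a rough check of these orders of magnitude, one can estimate the size of the wiring diagram under a simple assumption that is mine, not part of the argument above: each synapse is recorded only by the index of its target neuron, with no weight or position, and the upper estimate of $10^{14}$ synapses is used.

$$
\begin{aligned}
\text{bits per synapse} &\approx \log_2\!\left(8\times10^{10}\right) \approx 36,\\
\text{wiring diagram} &\approx 10^{14}\times 36\ \text{bits} \approx 3.6\times10^{15}\ \text{bits} \approx 4.5\times10^{14}\ \text{bytes} \approx 0.45\ \text{PB},\\
\text{ratio to the genome} &\approx \frac{4.5\times10^{14}\ \text{bytes}}{10^{9}\ \text{bytes}} \approx 4.5\times10^{5}.
\end{aligned}
$$

Under this assumption the estimate lands within a small factor of a petabyte and of the million-fold ratio; with $10^{13}$ synapses it would be ten times smaller, which still leaves a gap of several orders of magnitude.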

Network self-organization has been well studied, both theoretically and experimentally, in work on the generation of regular connectivity patterns in the brain (retinotopy, orientation maps, ocularity domains etc.). Beyond any doubt, it is the mechanism by which the wiring of the brain is structured. When searching for the neural code we have to look for those connectivity patterns that are generated and stabilized by this mechanism. These patterns likely are the building blocks of our cognitive space, building blocks that can link up in infinite ways to form larger nets with the same property of being stable under self-interaction.

One way to look at mind, intelligence and creativity is to see them as a search problem. Indeed it has been said that classical AI was based on converting everything to a search problem, only to then get lost in the vastness of combinatorial spaces that no computer on earth could fathom.

If bits are too unstructured and classical neurons too narrow, how does the neural code sail safely between those two cliffs?

How can a system with the powerful prejudice of insisting on a code based on the mechanical laws of network self-organization not be too narrowly biased? How can it be wide enough to define a mental space capturing the world, and more?

I am afraid, though, that there is no escape from the conclusion that the “mechanical” laws of a material nervous system are able to create the mental universe. One may take it as an encouraging sign that nets with the structure of a topological space are supported by network self-organization (excitatory short-range connections generating short-range correlations, which in turn favor those short-range connections), and that a lot of our thinking is indeed structured by space or spatial metaphors; and that the homeomorphism relationship that relates schemas to instances – graph structure-preserving one-to-one mappings – seems to be efficiently identifiable by network self-organization.

All I want to say is that solving the neural code issue means opening the door to understanding the mind and replicating it in silico.
